New Amazon EC2 Trn2 instances, featuring AWS’s

newest Trainium2 AI chip, offer 30-40% better price performance

than the current generation of GPU-based EC2 instances

New Trn2 UltraServers use ultra-fast NeuronLink

interconnect to connect four Trn2 servers together into one giant

server, enabling the fastest training and inference on AWS for the

world’s largest models

At AWS re:Invent, Amazon Web Services, Inc. (AWS), an

Amazon.com, Inc. company (NASDAQ: AMZN), today announced the

general availability of AWS Trainium2-powered Amazon Elastic

Compute Cloud (Amazon EC2) instances, introduced new Trn2

UltraServers, enabling customers to train and deploy today’s latest

AI models as well as future large language models (LLMs) and

foundation models (FMs) with exceptional levels of performance and

cost efficiency, and unveiled next-generation Trainium3 chips.

This press release features multimedia. View

the full release here:

https://www.businesswire.com/news/home/20241203165432/en/

- Trn2 instances offer 30-40% better price performance than the

current generation of GPU-based EC2 P5e and P5en instances and

feature 16 Trainium2 chips to provide 20.8 peak petaflops of

compute—ideal for training and deploying LLMs with billions of

parameters.

- Amazon EC2 Trn2 UltraServers are a completely new EC2 offering

featuring 64 interconnected Trainium2 chips, using ultra-fast

NeuronLink interconnect, to scale up to 83.2 peak petaflops of

compute—quadrupling the compute, memory, and networking of a single

instance—which makes it possible to train and deploy the world’s

largest models.

- Together with Anthropic, AWS is building an EC2 UltraCluster of

Trn2 UltraServers—named Project Rainier—containing hundreds of

thousands of Trainium2 chips and more than 5x the number of

exaflops used to train their current generation of leading AI

models.

- AWS unveiled Trainium3, its next-generation AI chip, which will

allow customers to build bigger models faster and deliver superior

real-time performance when deploying them.

“Trainium2 is purpose-built to support the largest, most

cutting-edge generative AI workloads, for both training and

inference, and to deliver the best price performance on AWS,” said

David Brown, vice president of Compute and Networking at AWS. “With

models approaching trillions of parameters, we understand customers

also need a novel approach to train and run these massive

workloads. New Trn2 UltraServers offer the fastest training and

inference performance on AWS and help organizations of all sizes to

train and deploy the world’s largest models faster and at a lower

cost.”

As models grow in size, they are pushing the limits of compute

and networking infrastructure as customers seek to reduce training

times and inference latency—the time between when an AI system

receives an input and generates the corresponding output. AWS

already offers the broadest and deepest selection of accelerated

EC2 instances for AI/ML, including those powered by GPUs and ML

chips. But even with the fastest accelerated instances available

today, customers want more performance and scale to train these

increasingly sophisticated models faster, at a lower cost. As model

complexity and data volumes grow, simply increasing cluster size

fails to yield faster training time due to parallelization

constraints. Simultaneously, the demands of real-time inference

push single-instance architectures beyond their capabilities.

Trn2 is the highest performing Amazon EC2 instance for deep

learning and generative AI

Trn2 offers 30-40% better price performance than the current

generation of GPU-based EC2 instances. A single Trn2 instance

combines 16 Trainium2 chips interconnected with ultra-fast

NeuronLink high-bandwidth, low-latency chip-to-chip interconnect to

provide 20.8 peak petaflops of compute, ideal for training and

deploying models that are billions of parameters in size.
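As a rough illustration of what a 30-40% price-performance gain could mean in cost terms, the sketch below converts the gain into the relative cost of a fixed workload. It assumes that "price performance" means work done per dollar, which the release does not define, so the resulting figures are illustrative only. The function name `cost_fraction` is hypothetical.

```python
def cost_fraction(price_perf_gain: float) -> float:
    """Relative cost of a fixed workload versus the baseline.

    Assumption (not stated in the release): price performance is
    work done per dollar, so a gain of g divides cost by (1 + g).
    """
    if price_perf_gain <= -1.0:
        raise ValueError("gain must be greater than -1.0")
    return 1.0 / (1.0 + price_perf_gain)


if __name__ == "__main__":
    # The two ends of the 30-40% range quoted in the release.
    for gain in (0.30, 0.40):
        saving = 1.0 - cost_fraction(gain)
        print(f"{gain:.0%} better price performance -> about {saving:.1%} lower cost")
```

Under that assumption, the quoted range corresponds to roughly 23% to 29% lower cost for the same amount of work, which is why a price-performance percentage and a savings percentage are not interchangeable.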

Trn2 UltraServers meet increasingly demanding AI compute

needs of the world’s largest models

For the largest models that require even more compute, Trn2

UltraServers allow customers to scale training beyond the limits of

a single Trn2 instance, reducing training time, accelerating time

to market, and enabling rapid iteration to improve model accuracy.

Trn2 UltraServers are a completely new EC2 offering that uses

ultra-fast NeuronLink interconnect to connect four Trn2 servers

together into one giant server. With new Trn2 UltraServers,

customers can scale up their generative AI workloads across 64

Trainium2 chips. For inference workloads, customers can use Trn2

UltraServers to improve real-time inference performance for

trillion-parameter models in production. Together with Anthropic,

AWS is building an EC2 UltraCluster of Trn2 UltraServers, named

Project Rainier, which will scale out distributed model training

across hundreds of thousands of Trainium2 chips interconnected with

third-generation, low-latency, petabit-scale EFA networking. The

cluster will provide more than 5x the number of exaflops that

Anthropic used to train their current generation of leading AI

models. When completed, it is expected to be the world’s largest AI

compute cluster reported to date, and it will be available for

Anthropic to build and deploy their future models.
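The instance and UltraServer figures quoted in this release are consistent with one another, as the short arithmetic sketch below shows. The chip count, instance peak, and four-instance grouping come from the release. The per-chip value is derived here, not quoted, and assumes peak compute divides evenly across chips. All constant and function names are hypothetical.

```python
# Figures quoted in the release.
CHIPS_PER_INSTANCE = 16         # Trainium2 chips in one Trn2 instance
INSTANCE_PEAK_PFLOPS = 20.8     # peak petaflops of one Trn2 instance
INSTANCES_PER_ULTRASERVER = 4   # Trn2 servers joined by NeuronLink


def per_chip_peak_pflops() -> float:
    """Derived value: instance peak split evenly across its chips (assumption)."""
    return INSTANCE_PEAK_PFLOPS / CHIPS_PER_INSTANCE


def ultraserver_totals() -> tuple[int, float]:
    """Chip count and peak petaflops for one UltraServer."""
    chips = CHIPS_PER_INSTANCE * INSTANCES_PER_ULTRASERVER
    pflops = INSTANCE_PEAK_PFLOPS * INSTANCES_PER_ULTRASERVER
    return chips, pflops


if __name__ == "__main__":
    chips, pflops = ultraserver_totals()
    print(f"Per chip (derived): {per_chip_peak_pflops():.1f} peak petaflops")
    print(f"UltraServer: {chips} chips, {pflops:.1f} peak petaflops")
```

Multiplying the single-instance figures by four reproduces the 64 chips and 83.2 peak petaflops stated for a Trn2 UltraServer.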

Anthropic is an AI safety and research company that creates

reliable, interpretable, and steerable AI systems. Anthropic’s

flagship product is Claude, an LLM trusted by millions of users

worldwide. As part of Anthropic’s expanded collaboration with AWS,

they’ve begun optimizing Claude models to run on Trainium2,

Amazon’s most advanced AI hardware to date. Anthropic will be using

hundreds of thousands of Trainium2 chips—over five times the size

of their previous cluster—to deliver exceptional performance for

customers using Claude in Amazon Bedrock.

Databricks’ Mosaic AI enables organizations to build and deploy

quality agent systems. It is built natively on top of the data

lakehouse, enabling customers to easily and securely customize

their models with enterprise data and deliver more accurate and

domain-specific outputs. Thanks to Trainium's high performance and

cost-effectiveness, customers can scale model training on Mosaic AI

at a low cost. Trainium2’s availability will be a major benefit to

Databricks and its customers as demand for Mosaic AI continues to

scale across all customer segments and around the world.

Databricks, one of the largest data and AI companies in the world,

plans to use Trn2 to deliver better results and lower TCO by up to

30% for its customers.

Hugging Face is the leading open platform for AI builders, with

more than 2 million models, datasets, and AI applications shared by

a community of more than 5 million researchers, data scientists,

machine learning engineers, and software developers. Hugging Face

has collaborated with AWS over the last couple of years, making it

easier for developers to experience the performance and cost

benefits of AWS Inferentia and Trainium through the Optimum Neuron

open-source library, integrated in Hugging Face Inference Endpoints

and now optimized within the new HUGS self-deployment service,

available on the AWS Marketplace. With the launch of Trainium2,

Hugging Face users will have access to even higher performance to

develop and deploy models faster.

poolside is set to build a world where AI will drive the

majority of economically valuable work and scientific progress.

poolside believes that software development will be the first major

capability in neural networks that reaches human-level

intelligence. To enable that, they're building FMs, an API, and an

assistant to bring the power of generative AI to developers' hands.

A key to enabling this technology is the infrastructure they’re using

to build and run their products. With AWS Trainium2, poolside’s

customers will be able to scale their usage of poolside at a

price-performance ratio unlike that of other AI accelerators. In addition,

poolside plans to train future models with Trainium2 UltraServers,

with expected savings of 40% compared to EC2 P5 instances.

Trainium3 chips—designed for the high-performance needs of

the next frontier of generative AI workloads

AWS unveiled Trainium3, its next-generation AI training chip.

Trainium3 will be the first AWS chip made with a 3-nanometer

process node, setting a new standard for performance, power

efficiency, and density. Trainium3-powered UltraServers are

expected to be 4x more performant than Trn2 UltraServers, allowing

customers to iterate even faster when building models and deliver

superior real-time performance when deploying them. The first

Trainium3-based instances are expected to be available in late

2025.

Enabling customers to unlock the performance of Trainium2

with AWS Neuron software

The Neuron SDK includes a compiler, runtime libraries, and tools

that help developers optimize their models for performance on

Trainium chips. Neuron is natively integrated with

popular frameworks like JAX and PyTorch so customers can continue

using their existing code and workflows on Trainium with fewer code

changes. Neuron also supports over 100,000 models on the Hugging

Face model hub. With the Neuron Kernel Interface (NKI), developers

get access to bare metal Trainium chips, enabling them to write

compute kernels that maximize performance for demanding

workloads.

Neuron software is designed to make it easy to use popular

frameworks like JAX to train and deploy models on Trainium2 while

minimizing code changes and tie-in to vendor-specific solutions.

Google is supporting AWS's efforts to enable customers to use JAX

for large-scale training and inference through its native OpenXLA

integration, providing users an easy and portable coding path to

get started with Trn2 instances quickly. With industry-wide

open-source collaboration and the availability of Trainium2, Google

expects to see increased adoption of JAX across the ML community—a

significant milestone for the entire ML ecosystem.

Trn2 instances are generally available today in the US East

(Ohio) AWS Region, with availability in additional regions coming

soon. Trn2 UltraServers are available in preview.

To learn more, visit:

- The AWS News Blog for details on today’s announcements.

- The AWS Trainium page to learn more about the

capabilities.

- The AWS Trainium customer page to learn how companies are using

Trainium.

- The AWS re:Invent page for more details on everything happening

at AWS re:Invent.

About Amazon Web Services

Since 2006, Amazon Web Services has been the world’s most

comprehensive and broadly adopted cloud. AWS has been continually

expanding its services to support virtually any workload, and it

now has more than 240 fully featured services for compute, storage,

databases, networking, analytics, machine learning and artificial

intelligence (AI), Internet of Things (IoT), mobile, security,

hybrid, media, and application development, deployment, and

management from 108 Availability Zones within 34 geographic

regions, with announced plans for 18 more Availability Zones and

six more AWS Regions in Mexico, New Zealand, the Kingdom of Saudi

Arabia, Taiwan, Thailand, and the AWS European Sovereign Cloud.

Millions of customers—including the fastest-growing startups,

largest enterprises, and leading government agencies—trust AWS to

power their infrastructure, become more agile, and lower costs. To

learn more about AWS, visit aws.amazon.com.

About Amazon

Amazon is guided by four principles: customer obsession rather

than competitor focus, passion for invention, commitment to

operational excellence, and long-term thinking. Amazon strives to

be Earth's Most Customer-Centric Company, Earth's Best Employer,

and Earth's Safest Place to Work. Customer reviews, 1-Click

shopping, personalized recommendations, Prime, Fulfillment by

Amazon, AWS, Kindle Direct Publishing, Kindle, Career Choice, Fire

tablets, Fire TV, Amazon Echo, Alexa, Just Walk Out technology,

Amazon Studios, and The Climate Pledge are some of the things

pioneered by Amazon. For more information, visit amazon.com/about

and follow @AmazonNews.

Amazon.com, Inc. Media Hotline Amazon-pr@amazon.com

www.amazon.com/pr

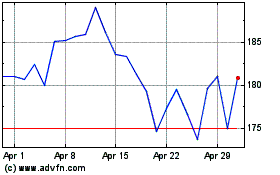

Amazon.com (NASDAQ:AMZN)

Historical Stock Chart

From Nov 2024 to Dec 2024

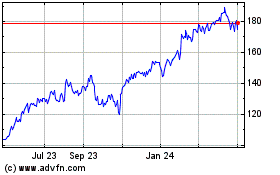

Amazon.com (NASDAQ:AMZN)

Historical Stock Chart

From Dec 2023 to Dec 2024